Cross-host Container Networks - UDP mode

In the previous article, we walked through container networking on a single Linux host. However, with the default Docker configuration, a container cannot communicate with a container on a different host.

There are many solutions to the cross-host container communication problem, and we will go through some of them one by one. The first, and a very well-known one, is Flannel CNI.

Flannel CNI

Flannel is one of the projects developed by CoreOS. Even though CoreOS has stopped maintaining it, Flannel is still an option for a Kubernetes CNI network solution. Flannel provides three back-end implementations:

- VXLAN

- Host-GW

- UDP

UDP Mode

Flannel supported the UDP implementation in its early stage. It was later deprecated in favor of VXLAN because of performance issues. However, the idea is straightforward and easy to understand.

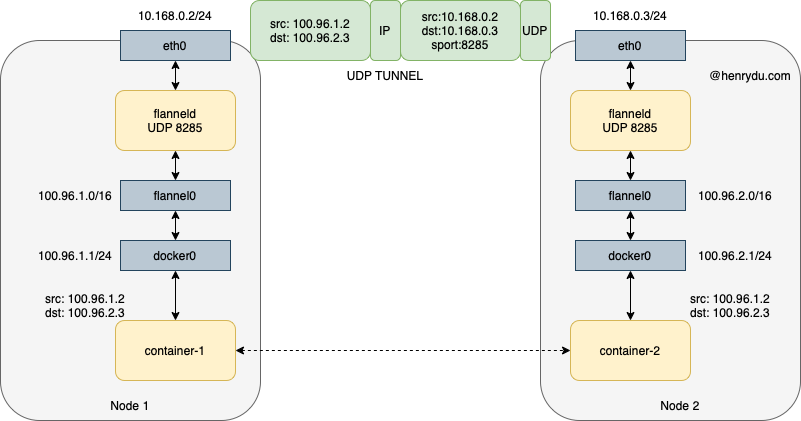

Let’s take an environment with two hosts:

- Node 1 has a container-1 with IP 100.96.1.2. The docker0 IP is 100.96.1.1/24.

- Node 2 has a container-2 with IP 100.96.2.3. The docker0 IP is 100.96.2.1/24.

We will walk through the network flow when container-1 accesses container-2.

When container-1 prepares the IP packet, the source IP is 100.96.1.2 and the destination IP is 100.96.2.3. Inside the container, the destination IP matches only the default entry in the routing table, so the packet is forwarded to the docker0 bridge. The packet thereby reaches the Node 1 host network.

Flannel creates extra routing rules on the host for the container network.

$ ip route

default via 10.168.0.1 dev eth0

100.96.0.0/16 dev flannel0 proto kernel scope link src 100.96.1.0

100.96.1.0/24 dev docker0 proto kernel scope link src 100.96.1.1

10.168.0.0/24 dev eth0 proto kernel scope link src 10.168.0.2

As we can see from the routing table, the destination IP 100.96.2.3 does not fall into the docker0 subnet 100.96.1.0/24, so the most specific matching route is 100.96.0.0/16, which points to the flannel0 device. flannel0 is a TUN device (tunnel device). In Linux, a TUN device is a virtual layer 3 network device that passes network packets between user space and kernel space.
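The route selection above follows the longest-prefix-match rule. The following is a minimal sketch of that rule using the Node 1 routing table from this article; the function name `lookup_device` and the table layout are illustrative assumptions, not how the kernel stores routes.

```python
import ipaddress

# Node 1 routing table from the article, as (destination, device) pairs.
NODE1_ROUTES = [
    ("0.0.0.0/0", "eth0"),          # default via 10.168.0.1
    ("100.96.0.0/16", "flannel0"),
    ("100.96.1.0/24", "docker0"),
    ("10.168.0.0/24", "eth0"),
]


def lookup_device(routes, destination):
    """Return the device of the most specific route that contains destination."""
    addr = ipaddress.ip_address(destination)
    candidates = []
    for prefix, device in routes:
        network = ipaddress.ip_network(prefix)
        if addr in network:
            candidates.append((network.prefixlen, device))
    if not candidates:
        raise LookupError(f"no route to {destination}")
    # The longest prefix (largest prefix length) wins.
    return max(candidates)[1]


print(lookup_device(NODE1_ROUTES, "100.96.2.3"))  # flannel0
print(lookup_device(NODE1_ROUTES, "100.96.1.5"))  # docker0
```

A destination in the local docker0 subnet stays on the bridge, while any other address in 100.96.0.0/16 is handed to flannel0.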

When the Linux kernel routes a network packet to the flannel0 device, the device hands the packet to a user space application. In this case, the application is flanneld. Likewise, when flanneld writes a network packet to the flannel0 device, the device passes it into kernel space, where it is handled by the kernel network stack.
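To make the user space side of a TUN device more concrete, here is a rough sketch of how a program could attach to one on Linux. It is not flanneld's code: the device name `tun-demo` and the helper names are assumptions for illustration, and `open_tun` needs root privileges (or CAP_NET_ADMIN) to succeed.

```python
import fcntl
import os
import struct

# Constants from <linux/if_tun.h>.
TUNSETIFF = 0x400454CA
IFF_TUN = 0x0001     # layer 3 device: reads and writes carry raw IP packets
IFF_NO_PI = 0x1000   # do not prepend the extra packet-information header


def build_ifreq(name, flags):
    """Pack a 40-byte struct ifreq: 16-byte interface name, then 16-bit flags."""
    return struct.pack("16sH22x", name.encode(), flags)


def open_tun(name="tun-demo"):
    """Attach to a TUN device and return its file descriptor (needs root)."""
    fd = os.open("/dev/net/tun", os.O_RDWR)
    fcntl.ioctl(fd, TUNSETIFF, build_ifreq(name, IFF_TUN | IFF_NO_PI))
    return fd


# Usage, once the device is up and a route points to it:
#   fd = open_tun()
#   packet = os.read(fd, 2048)   # one IP packet the kernel routed to the device
#   os.write(fd, packet_bytes)   # inject an IP packet into the kernel stack
```

Each `os.read` returns one whole IP packet that the kernel routed to the device, and each `os.write` delivers one IP packet to the kernel network stack, which is the two-way hand-off described above.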

Therefore, the flanneld running on Node 1 receives the IP packet from container-1. When flanneld sees that the destination IP is 100.96.2.3, it forwards the packet to Node 2.

However, how does flanneld know that the destination container-2 is running on the Node 2 host?

Flannel Subnet

In a Flannel container network, all containers on one host belong to the subnet that Flannel assigned to that host. In our example, the Node 1 subnet is 100.96.1.0/24, so container-1 has the IP 100.96.1.2. The Node 2 subnet is 100.96.2.0/24, so container-2 has the IP 100.96.2.3. These subnet assignments are saved in etcd, and we can use the etcdctl tool to view them.

$ etcdctl ls /coreos.com/network/subnets

/coreos.com/network/subnets/100.96.1.0-24

/coreos.com/network/subnets/100.96.2.0-24

/coreos.com/network/subnets/100.96.3.0-24

When flanneld processes an IP packet from the flannel0 device, it matches the destination IP address against these subnets. In etcd, the matched subnet maps to a host IP address, which here is the Node 2 IP address.

$ etcdctl get /coreos.com/network/subnets/100.96.2.0-24

{"PublicIP":"10.168.0.3"}
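The lookup from a container IP to a host IP can be sketched as follows. The dictionary stands in for etcd, and the function names are assumptions for illustration. Only the value for 100.96.2.0-24 is shown in this article; 10.168.0.2 is the Node 1 address from its routing table, and 10.168.0.4 is an assumed placeholder for the third node.

```python
import ipaddress
import json

# Stand-in for the etcd keys and values shown above.
LEASES = {
    "/coreos.com/network/subnets/100.96.1.0-24": '{"PublicIP":"10.168.0.2"}',
    "/coreos.com/network/subnets/100.96.2.0-24": '{"PublicIP":"10.168.0.3"}',
    "/coreos.com/network/subnets/100.96.3.0-24": '{"PublicIP":"10.168.0.4"}',  # assumed
}


def key_to_network(key):
    """Turn '.../100.96.2.0-24' into the network 100.96.2.0/24."""
    last = key.rsplit("/", 1)[1]
    address, prefix_len = last.split("-")
    return ipaddress.ip_network(f"{address}/{prefix_len}")


def find_host(leases, destination):
    """Return the PublicIP of the host whose subnet contains destination."""
    addr = ipaddress.ip_address(destination)
    for key, value in leases.items():
        if addr in key_to_network(key):
            return json.loads(value)["PublicIP"]
    return None


print(find_host(LEASES, "100.96.2.3"))  # 10.168.0.3
```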

After getting the host IP address, flanneld encapsulates the IP packet in a UDP packet. The source address of the outer packet is the Node 1 IP, and its destination address is the Node 2 IP. The UDP port is 8285: flanneld runs on every node and listens on this port.

After Node 2 receives the UDP packet, its flanneld unpacks it to recover the original IP packet that container-1 sent from Node 1. It then writes this IP packet to the flannel0 TUN device, which passes it from user space into kernel space.
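The encapsulation and decapsulation steps can be sketched without any real network traffic. This sketch assumes that the inner IP packet is carried unchanged as the UDP payload and that the kernel adds the outer IP and UDP headers when the payload is sent through a UDP socket. The helper names and the hand-built test packet are illustrative, and the details of flanneld itself may differ.

```python
import ipaddress
import struct

FLANNEL_UDP_PORT = 8285


def build_ipv4_packet(src, dst, payload):
    """Build a minimal IPv4 packet (20-byte header, checksum left at zero)."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,                # version 4, header length of 5 32-bit words
        0,                   # DSCP/ECN
        20 + len(payload),   # total length
        0,                   # identification
        0,                   # flags and fragment offset
        64,                  # TTL
        253,                 # protocol number reserved for experimentation
        0,                   # header checksum, not computed in this sketch
        ipaddress.ip_address(src).packed,
        ipaddress.ip_address(dst).packed,
    )
    return header + payload


def inner_destination(packet):
    """Read the destination address from bytes 16..19 of the IPv4 header."""
    return str(ipaddress.ip_address(packet[16:20]))


def encapsulate(packet, subnet_hosts):
    """Pick the remote host for an inner packet; return (udp_address, udp_payload)."""
    dst = ipaddress.ip_address(inner_destination(packet))
    for subnet, host in subnet_hosts.items():
        if dst in ipaddress.ip_network(subnet):
            return (host, FLANNEL_UDP_PORT), packet
    raise LookupError(f"no subnet lease covers {dst}")


def decapsulate(udp_payload):
    """On the receiving node, the UDP payload is the original inner packet."""
    return udp_payload


SUBNET_HOSTS = {"100.96.2.0/24": "10.168.0.3"}

inner = build_ipv4_packet("100.96.1.2", "100.96.2.3", b"hello")
address, datagram = encapsulate(inner, SUBNET_HOSTS)
print(address)                                   # ('10.168.0.3', 8285)
print(inner_destination(decapsulate(datagram)))  # 100.96.2.3
```

On Node 1, `address` and `datagram` would be passed to a UDP socket's `sendto`. On Node 2, the bytes returned by `decapsulate` are what gets written to flannel0.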

Now consider the routing table of Node 2.

$ ip route

default via 10.168.0.1 dev eth0

100.96.0.0/16 dev flannel0 proto kernel scope link src 100.96.2.0

100.96.2.0/24 dev docker0 proto kernel scope link src 100.96.2.1

10.168.0.0/24 dev eth0 proto kernel scope link src 10.168.0.3

The destination IP of the packet is 100.96.2.3, which matches the third entry, the most specific one. The Linux kernel forwards the packet to the docker0 device, and container-2 then receives it through its veth pair.

From the routing tables of the two hosts, we can see that the docker0 subnet on each host must be the subnet that Flannel assigned to that host. This is achieved by setting the bip parameter when starting the Docker daemon.

$ FLANNEL_SUBNET=100.96.1.1/24

$ dockerd --bip=$FLANNEL_SUBNET
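The constraint on the bip value can be checked with a few lines of Python. This is only a sanity-check sketch, with a hypothetical function name `check_bip`, and it uses the Node 1 values from this article.

```python
import ipaddress


def check_bip(bip, flannel_network):
    """Return the docker0 subnet if bip lies inside the Flannel network."""
    bridge = ipaddress.ip_interface(bip)            # e.g. 100.96.1.1/24
    overlay = ipaddress.ip_network(flannel_network)
    if not bridge.network.subnet_of(overlay):
        raise ValueError(f"{bridge.network} is outside {overlay}")
    return bridge.network


print(check_bip("100.96.1.1/24", "100.96.0.0/16"))  # 100.96.1.0/24
```

A bridge address taken from some other range, such as 172.17.0.1/16, raises a `ValueError` here, because packets for those containers would never match the 100.96.0.0/16 route to flannel0 on the other hosts.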

As the diagram shows, the Flannel UDP implementation is effectively a layer 3 overlay network. The flanneld on each host encapsulates the IP packets from its containers in UDP packets, and the hosts exchange them over UDP port 8285.

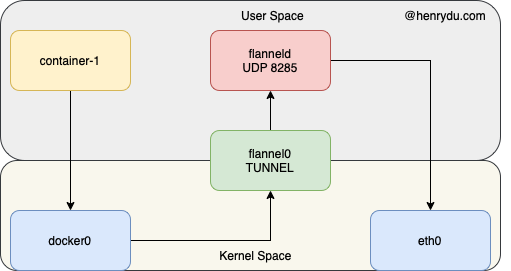
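Encapsulation also has a size cost: every inner packet gains an outer IPv4 header and a UDP header. The arithmetic below assumes an outer IPv4 header without options and a physical MTU of 1500 bytes on eth0. Both values are assumptions for illustration and are not taken from this article.

```python
OUTER_IPV4_HEADER = 20   # bytes, IPv4 header without options
UDP_HEADER = 8           # bytes
ENCAP_OVERHEAD = OUTER_IPV4_HEADER + UDP_HEADER


def overlay_mtu(physical_mtu):
    """Largest inner packet that still fits into one outer packet."""
    return physical_mtu - ENCAP_OVERHEAD


print(ENCAP_OVERHEAD)     # 28
print(overlay_mtu(1500))  # 1472
```

Under these assumptions, the overlay device needs an MTU of at most 1472 bytes so that an encapsulated packet does not have to be fragmented on the physical network.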

UDP Mode Performance

Flannel UDP mode was deprecated because of its performance. To provide the layer 3 overlay network, flanneld relies on the flannel0 TUN device, so sending out a single IP packet requires three switches between user space and kernel space:

- The container-1 process in user space sends an IP packet, which enters kernel space and reaches the docker0 device.

- The IP packet goes from kernel space to flanneld in user space via the flannel0 TUN device.

- flanneld wraps it in a UDP packet and passes it back into kernel space, which sends it out via the eth0 device.

In addition, encapsulation and decapsulation are done in user space. In Linux, context switches and transitions between user space and kernel space are expensive.

Therefore, to reduce this cost, encapsulation and decapsulation can be moved into kernel space. This is why Flannel now mainly supports VXLAN mode.

Reference

Deep Dive Kubernetes: Lei Zhang, a TOC member of CNCF.