Container Networks

Network Namespace

A Linux container's network stack is isolated in its own network namespace. The network stack includes the network interfaces, the loopback device, the routing table, and the iptables chains and rules. The containerized process uses its own network stack to send and respond to network requests. If the container should use the host network stack instead, pass the --net=host option.
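On Linux, the network namespace a process belongs to is exposed as the symbolic link /proc/<pid>/ns/net. As a minimal sketch (Python is used here only for illustration, and the helper name net_namespace_id is hypothetical), two processes share a network stack exactly when this link resolves to the same identifier:

```python
import os
from typing import Optional


def net_namespace_id(pid: str = "self") -> Optional[str]:
    """Return the network namespace link target of a process.

    On Linux the value has the form 'net:[<inode number>]'.
    Returns None when /proc/<pid>/ns/net cannot be read
    (non-Linux system, missing permissions, or no such process).
    """
    try:
        return os.readlink(f"/proc/{pid}/ns/net")
    except OSError:
        return None


if __name__ == "__main__":
    # Compare this value on the host and inside a container.
    print("network namespace of this process:", net_namespace_id())
```

Run inside a container started with --net=host, this should print the same identifier as on the host. Inside a container with its own network namespace, it should print a different one.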

Let’s start an Nginx container that shares the host network.

docker run -d --net=host --name nginx-host nginx

Once Nginx starts, it listens on TCP port 80 in the host network. Because the container shares the host network stack, it competes for ports with every other process on the host, and binding fails whenever the port is already taken. For this reason, in most cases we give each container its own network namespace.

root@docker-desktop:/# netstat -tpln

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1/nginx: master pro
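The port conflict described above can be reproduced without Docker. The following sketch uses plain Python sockets (the function name second_bind_fails is hypothetical) to bind two TCP sockets to the same address and port inside one network stack, which is the situation two host-network containers listening on the same port would be in:

```python
import errno
import socket


def second_bind_fails() -> bool:
    """Bind two TCP sockets to the same port in one network stack.

    Returns True if the second bind is rejected with EADDRINUSE.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        # Port 0 asks the kernel for any free port, so the sketch
        # needs neither root privileges nor a free port 80.
        first.bind(("127.0.0.1", 0))
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind(("127.0.0.1", port))
        except OSError as exc:
            return exc.errno == errno.EADDRINUSE
        return False
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    print("second bind rejected:", second_bind_fails())
```

With separate network namespaces, each container has its own port space, so this collision does not occur between containers.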

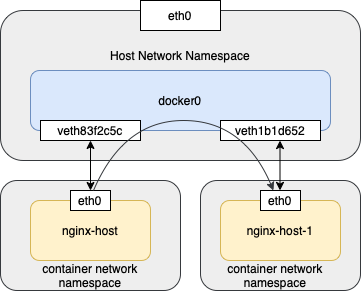

This raises a question: how do containers on the same host communicate with each other? Every container has its own network namespace. For them to exchange network traffic, something must act as a virtual network cable between them. This is where the Veth Pair device comes in.

Let’s remove the host-network container and start a new Nginx container with its own network namespace before continuing to the next section.

docker rm -f nginx-host

docker run -d -p 80:80 --name nginx-host nginx

Link One Container into the Host Network

When a Veth Pair device is created, it consists of two network interfaces, also known as Veth Peers. We can think of it as a point-to-point network link: a packet sent on one interface is received by the other, even when the two ends sit in different network namespaces.
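The behavior of a Veth Pair can be pictured with a toy model. This is only an illustrative sketch, not how the kernel implements the device; the class VethEnd and the function create_veth_pair are hypothetical names:

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Optional, Tuple


@dataclass(eq=False)
class VethEnd:
    """One end of a toy veth pair (a model, not a real kernel device)."""

    name: str
    namespace: str
    peer: Optional["VethEnd"] = field(default=None, repr=False)
    rx_queue: Deque[bytes] = field(default_factory=deque, repr=False)

    def send(self, frame: bytes) -> None:
        # Whatever is transmitted on one end is received on the peer,
        # regardless of which namespace the peer lives in.
        if self.peer is None:
            raise RuntimeError(f"{self.name} has no peer")
        self.peer.rx_queue.append(frame)

    def receive(self) -> Optional[bytes]:
        # Return the oldest pending frame, or None if nothing arrived.
        return self.rx_queue.popleft() if self.rx_queue else None


def create_veth_pair(
    name_a: str, ns_a: str, name_b: str, ns_b: str
) -> Tuple[VethEnd, VethEnd]:
    """Create two ends that are wired to each other."""
    a = VethEnd(name_a, ns_a)
    b = VethEnd(name_b, ns_b)
    a.peer, b.peer = b, a
    return a, b


if __name__ == "__main__":
    eth0, host_end = create_veth_pair("eth0", "container", "veth-host", "host")
    eth0.send(b"hello from the container")
    print(host_end.namespace, "received", host_end.receive())
```

The two ends carry a namespace label only to make the point that the link crosses the namespace boundary; the frame itself is handed over unchanged.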

After entering the Nginx container, we won’t see any of the host's network interfaces. Instead, we see the network interface eth0, which is one end of a Veth Pair.

root@f83827aca31f:/# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.2 netmask 255.255.0.0 broadcast 0.0.0.0

inet6 fe80::42:acff:fe11:2 prefixlen 64 scopeid 0x20<link>

ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)

RX packets 2669 bytes 8909570 (8.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2454 bytes 200664 (195.9 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

We can also view the container's routing table.

root@d32ad39caa3b:/# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.17.0.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

The first entry is the default route: traffic that matches nothing more specific leaves through eth0 toward the gateway 172.17.0.1. The second entry sends all traffic destined for the 172.17.0.0/16 network directly out of eth0.

The other end of the Veth Pair is located in the host network, as the ifconfig output on the host shows.

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

inet6 fe80::42:bcff:fe5e:d16d prefixlen 64 scopeid 0x20<link>

ether 02:42:bc:5e:d1:6d txqueuelen 0 (Ethernet)

RX packets 8 bytes 544 (544.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 24 bytes 3316 (3.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth83f2c5c: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::6015:54ff:fe9c:39b9 prefixlen 64 scopeid 0x20<link>

ether 62:15:54:9c:39:b9 txqueuelen 0 (Ethernet)

RX packets 8 bytes 656 (656.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 40 bytes 5384 (5.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Running the brctl show command shows that the other end of the Veth Pair, veth83f2c5c, is attached to the bridge device docker0.

# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242bc5ed16d no veth83f2c5c

So far, we have connected one container's network to the host network by using a Veth Pair. The next section explains how multiple containers on one host communicate with each other.

Communication Between Multiple Containers on One Host

Let’s start another Nginx container.

docker run -d --name nginx-host-2 nginx

We can see that another Veth Peer interface, veth1b1d652, is now attached to docker0.

# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242bc5ed16d no veth1b1d652

veth83f2c5c
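Note that brctl show lists the second and later interfaces of a bridge on continuation lines that contain only the interface name. If this output needs to be processed by a script, a small parser along the following lines could handle that layout. This is a sketch that assumes whitespace-separated columns as in the output above; the function name parse_brctl_show is hypothetical:

```python
from typing import Dict, List, Optional


def parse_brctl_show(output: str) -> Dict[str, List[str]]:
    """Map each bridge name to the list of interfaces attached to it."""
    bridges: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for line in output.splitlines():
        tokens = line.split()
        if not tokens or tokens[:2] == ["bridge", "name"]:
            continue  # blank line or header line
        if len(tokens) >= 3:
            # bridge name, bridge id, STP flag, optional first interface
            current = tokens[0]
            bridges[current] = tokens[3:]
        elif current is not None:
            # continuation line: another interface of the current bridge
            bridges[current].extend(tokens)
    return bridges


if __name__ == "__main__":
    sample = """bridge name bridge id STP enabled interfaces
docker0 8000.0242bc5ed16d no veth1b1d652
veth83f2c5c
"""
    print(parse_brctl_show(sample))
```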

After entering the container nginx-host and pinging the IP address of container nginx-host-2, 172.17.0.3, we observe that the two containers are connected.

When we issue the ping command to the destination 172.17.0.3, the local routing table is consulted using the longest prefix match rule. The second entry matches.

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.17.0.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

The Gateway is 0.0.0.0. This means the route is a directly connected route: packets that match it need no next-hop gateway and are sent out of the network interface eth0 straight to the destination over the layer 2 network.
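The route selection described above can be sketched with Python's standard ipaddress module. The two routes are taken from the routing table shown earlier; the names Route, make_route and lookup are hypothetical, and the kernel's real lookup is considerably more involved:

```python
import ipaddress
from typing import List, NamedTuple


class Route(NamedTuple):
    network: ipaddress.IPv4Network
    gateway: ipaddress.IPv4Address
    iface: str


def make_route(destination: str, genmask: str, gateway: str, iface: str) -> Route:
    # Convert the dotted netmask into a prefix length by counting its 1 bits.
    prefixlen = bin(int(ipaddress.IPv4Address(genmask))).count("1")
    return Route(
        ipaddress.IPv4Network(f"{destination}/{prefixlen}"),
        ipaddress.IPv4Address(gateway),
        iface,
    )


# The two entries from the container's routing table.
ROUTES = [
    make_route("0.0.0.0", "0.0.0.0", "172.17.0.1", "eth0"),
    make_route("172.17.0.0", "255.255.0.0", "0.0.0.0", "eth0"),
]


def lookup(routes: List[Route], destination: str) -> Route:
    """Return the matching route with the longest prefix."""
    addr = ipaddress.IPv4Address(destination)
    candidates = [r for r in routes if addr in r.network]
    if not candidates:
        raise LookupError(f"no route to {destination}")
    return max(candidates, key=lambda r: r.network.prefixlen)


if __name__ == "__main__":
    for dst in ("172.17.0.3", "8.8.8.8"):
        route = lookup(ROUTES, dst)
        print(dst, "->", route.network, "via", route.gateway, "dev", route.iface)
```

Both entries match 172.17.0.3, but the /16 entry is more specific than the /0 default route, so it wins. An address outside 172.17.0.0/16, such as 8.8.8.8 in the usage lines, only matches the default route and is sent to the gateway 172.17.0.1.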

To send the ICMP (ping) packet, the destination MAC address is needed. An ARP (Address Resolution Protocol) request is therefore broadcast via eth0. It essentially asks: whoever owns the IP address 172.17.0.3, please tell me your MAC address.

The device eth0 is paired with veth83f2c5c, which is attached to the bridge device docker0. All network interfaces attached to the bridge belong to the same layer 2 broadcast domain. Therefore, docker0 floods the broadcast to all other attached interfaces, in our case the device veth1b1d652. When container nginx-host-2 receives the ARP request, it replies to container nginx-host with the MAC address for 172.17.0.3. With that, the ICMP echo request is built and sent to the destination.

The following diagram illustrates the ping traffic flow.
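The ARP exchange across the bridge can be sketched as a toy simulation. The IP addresses, MAC addresses and interface names in the usage lines are the ones that appear in this article's outputs; the classes Host and Bridge are hypothetical and greatly simplify what a real bridge does (no MAC learning table, no frames):

```python
from typing import Dict, List, Optional, Tuple


class Host:
    """A toy container endpoint with one IP and one MAC address."""

    def __init__(self, name: str, ip: str, mac: str) -> None:
        self.name, self.ip, self.mac = name, ip, mac

    def handle_arp_request(self, target_ip: str) -> Optional[str]:
        # Only the owner of the requested IP answers with its MAC address.
        return self.mac if target_ip == self.ip else None


class Bridge:
    """A toy bridge that floods a broadcast to every port except the ingress port."""

    def __init__(self) -> None:
        self.ports: Dict[str, Host] = {}  # bridge port name -> attached host

    def attach(self, port: str, host: Host) -> None:
        self.ports[port] = host

    def arp_resolve(
        self, ingress_port: str, target_ip: str
    ) -> Tuple[List[str], Optional[str]]:
        """Flood an ARP request; return (ports flooded to, MAC answer or None)."""
        flooded_to: List[str] = []
        answer: Optional[str] = None
        for port, host in self.ports.items():
            if port == ingress_port:
                continue  # never send the broadcast back where it came from
            flooded_to.append(port)
            mac = host.handle_arp_request(target_ip)
            if mac is not None:
                answer = mac
        return flooded_to, answer


if __name__ == "__main__":
    docker0 = Bridge()
    docker0.attach("veth83f2c5c", Host("nginx-host", "172.17.0.2", "02:42:ac:11:00:02"))
    docker0.attach("veth1b1d652", Host("nginx-host-2", "172.17.0.3", "02:42:ac:11:00:03"))
    print(docker0.arp_resolve("veth83f2c5c", "172.17.0.3"))
```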

iptables

When network traffic travels from one network namespace to another, the Linux kernel's Netfilter plays an important role. We can enable the iptables TRACE target to watch how the packets flow.

$ iptables -t raw -A OUTPUT -p icmp -j TRACE

$ iptables -t raw -A PREROUTING -p icmp -j TRACE

After enabling TRACE, we can watch the dmesg output.

[18414070.307075] TRACE: raw:PREROUTING:policy:5 IN=docker0 OUT= PHYSIN=veth83f2c5c MAC=02:42:ac:11:00:03:02:42:ac:11:00:02:08:00 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

[18414070.307083] TRACE: mangle:PREROUTING:rule:1 IN=docker0 OUT= PHYSIN=veth83f2c5c MAC=02:42:ac:11:00:03:02:42:ac:11:00:02:08:00 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

[18414070.307089] TRACE: mangle:PREROUTING_direct:return:1 IN=docker0 OUT= PHYSIN=veth83f2c5c MAC=02:42:ac:11:00:03:02:42:ac:11:00:02:08:00 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

[18414070.307094] TRACE: mangle:PREROUTING:rule:2 IN=docker0 OUT= PHYSIN=veth83f2c5c MAC=02:42:ac:11:00:03:02:42:ac:11:00:02:08:00 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

...

[18414070.307307] TRACE: mangle:FORWARD:rule:1 IN=docker0 OUT=docker0 PHYSIN=veth83f2c5c PHYSOUT=veth1b1d652 MAC=02:42:ac:11:00:03:02:42:ac:11:00:02:08:00 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

[18414070.307312] TRACE: mangle:FORWARD_direct:return:1 IN=docker0 OUT=docker0 PHYSIN=veth83f2c5c PHYSOUT=veth1b1d652 MAC=02:42:ac:11:00:03:02:42:ac:11:00:02:08:00 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

...

[18414070.307365] TRACE: mangle:POSTROUTING:rule:2 IN= OUT=docker0 PHYSIN=veth83f2c5c PHYSOUT=veth1b1d652 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1

[18414070.307369] TRACE: mangle:POSTROUTING_direct:return:1 IN= OUT=docker0 PHYSIN=veth83f2c5c PHYSOUT=veth1b1d652 SRC=172.17.0.2 DST=172.17.0.3 LEN=84 TOS=0x00 PREC=0x00 TTL=64 ID=32106 DF PROTO=ICMP TYPE=8 CODE=0 ID=31596 SEQ=1
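Each TRACE entry starts with table:chain:type:rule number, followed by key=value fields describing the packet. A short parser makes these long lines easier to compare. This is a sketch whose regular expression is based only on the log lines shown above; the names TRACE_RE and parse_trace are hypothetical:

```python
import re
from typing import Dict, Optional

# Matches the "TRACE: table:chain:type:rulenum" prefix and captures the rest.
TRACE_RE = re.compile(
    r"TRACE: (?P<table>\w+):(?P<chain>\w+):(?P<kind>\w+):(?P<rulenum>\d+) (?P<fields>.*)"
)


def parse_trace(line: str) -> Optional[Dict[str, str]]:
    """Split one TRACE log line into a flat dictionary, or return None."""
    match = TRACE_RE.search(line)
    if match is None:
        return None
    record = {k: match.group(k) for k in ("table", "chain", "kind", "rulenum")}
    for token in match.group("fields").split():
        key, sep, value = token.partition("=")
        # Flags without "=" (such as DF) are skipped. "ID" appears twice
        # (IP header, then ICMP), so only the first occurrence is kept.
        if sep and key not in record:
            record[key] = value
    return record


if __name__ == "__main__":
    line = (
        "[18414070.307307] TRACE: mangle:FORWARD:rule:1 IN=docker0 OUT=docker0 "
        "PHYSIN=veth83f2c5c PHYSOUT=veth1b1d652 SRC=172.17.0.2 DST=172.17.0.3 "
        "PROTO=ICMP TYPE=8 CODE=0"
    )
    rec = parse_trace(line)
    print(rec["table"], rec["chain"], rec["PHYSIN"], "->", rec["PHYSOUT"])
```

In the FORWARD entries, PHYSIN and PHYSOUT name the two bridge ports the packet crosses: it enters docker0 through veth83f2c5c and leaves through veth1b1d652.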

Therefore, a containerized process, isolated in its own network namespace, uses a Veth Pair and the host's network bridge to communicate with other containers and even with destinations outside the host.

Reference

Deep Dive Kubernetes: Lei Zhang, a TOC member of CNCF.